On Friday, October 18, 2024, during the AI Days’ “AI 4 ALL” event, we gave two talks on the ethics of AI from the perspective of technical researchers and developers. The first talk, tailored to high school students, opened the event in the morning; the second talk, aimed at the general public, closed the series of talks in the late afternoon. After each of our short talks, we addressed the audience’s questions and concerns. Find our slides here (for the morning) and here (for the afternoon), both in Czech. For English, see the reworked version or the summary below.

Because the two audiences differed, we prepared two versions of a talk on the same topic. In this post, we summarize the union of their contents in the hope that it will be of interest to our readers. Further, the recording of the first talk (in Czech) can be found below:

Ethics of AI

There are many well-known cases of unethical (or ethically questionable) use of AI, from COMPAS, through Palantir, to the social credit system in China. This post, however, focuses on the currently popular generative AI, specifically Large Language Models (LLMs).

Who should care about it?

Isn’t AI ethics a topic for ethicists and philosophers? Is it not sufficient to outlaw unethical applications of AI? We argue that there is plenty of space for technical work to be done. Importantly, ethicists and regulators typically only say what is wrong or forbidden. Proposing how to make something right is a task for people with a deeper understanding of the technology. Regulation can set formal requirements, but their usefulness is ultimately determined by how AI engineers implement them.

AI Alignment

Narrowing our focus to AI Safety, specifically alignment with human values, we must briefly explain what Reinforcement Learning from Human Feedback (RLHF) is. In RLHF, we first train a proxy model of human preferences over texts (a reward model) on comparisons collected from human labelers. This model is then used as an oracle that scores the LLM’s outputs, providing the feedback signal for fine-tuning in place of direct human labeling. This is the main method used to fine-tune LLMs against misuse.

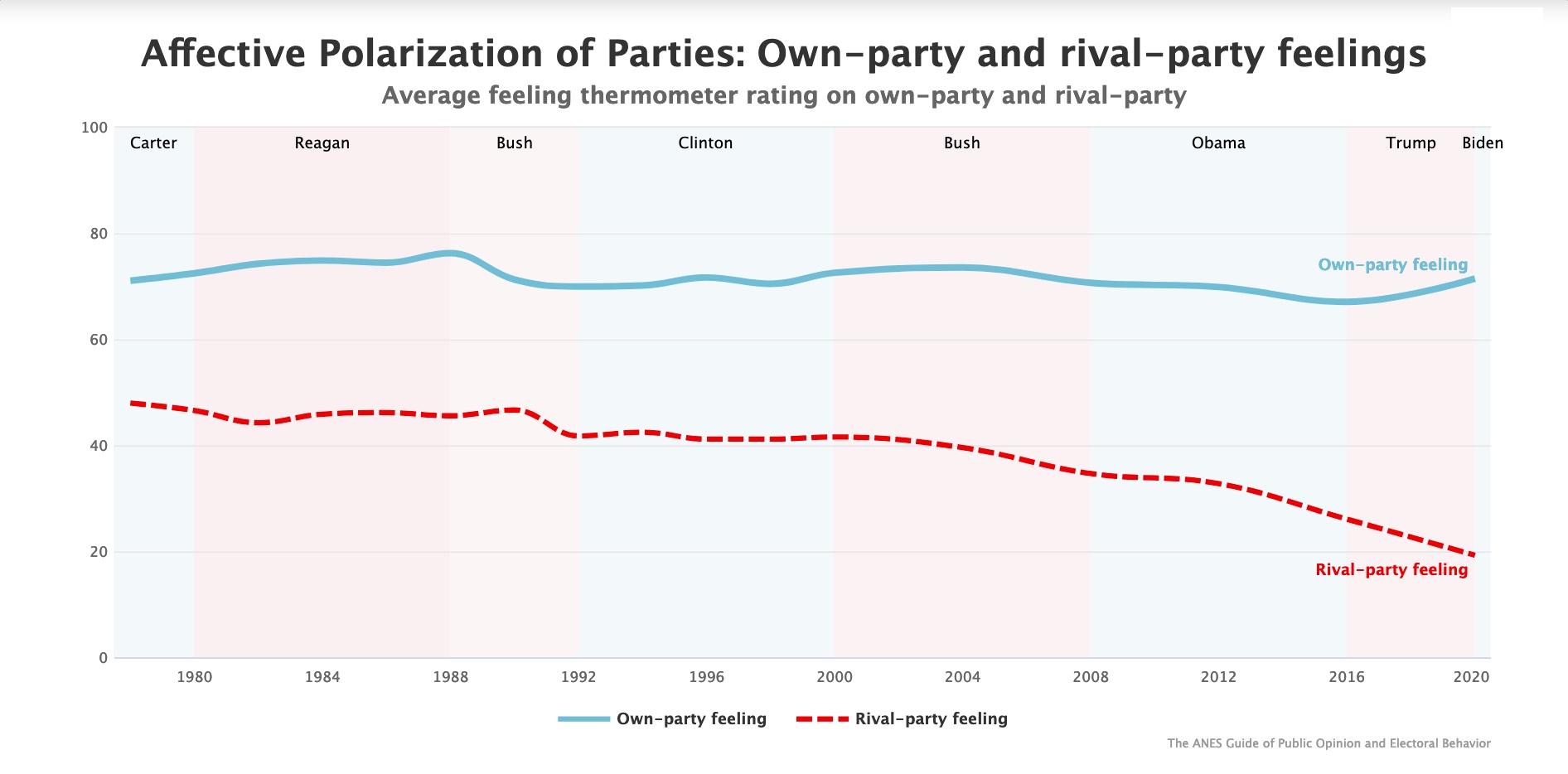
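
The reward-modeling step of RLHF can be sketched in a few lines. This is a minimal illustration, not the pipeline of any particular LLM provider. It assumes that each text has already been reduced to two hypothetical numeric features (helpfulness and harmfulness), uses a linear reward model, and fits it with the pairwise logistic (Bradley-Terry) loss that is commonly used for preference data. All names and numbers are made up for the example.

```python
import math

def reward(weights, features):
    """Linear reward: a higher value means 'more preferred by the labelers'."""
    return sum(w * x for w, x in zip(weights, features))

def train_reward_model(pairs, dim, lr=0.1, epochs=200):
    """Fit weights on (chosen, rejected) feature pairs collected from humans."""
    weights = [0.0] * dim
    for _ in range(epochs):
        for chosen, rejected in pairs:
            margin = reward(weights, chosen) - reward(weights, rejected)
            # Model's probability that the human-chosen text is preferred.
            p_chosen = 1.0 / (1.0 + math.exp(-margin))
            # Gradient ascent on log(p_chosen).
            for i in range(dim):
                weights[i] += lr * (1.0 - p_chosen) * (chosen[i] - rejected[i])
    return weights

# Hypothetical features per text: [helpfulness, harmfulness].
# Each pair is (text the labeler preferred, text the labeler rejected).
human_preferences = [
    ([1.0, 0.0], [0.0, 1.0]),
    ([0.9, 0.1], [0.8, 0.9]),
    ([0.6, 0.0], [0.5, 0.6]),
]
weights = train_reward_model(human_preferences, dim=2)

# The trained model now stands in for the labelers on unseen texts.
candidates = {"polite refusal": [0.4, 0.0], "harmful answer": [0.4, 1.0]}
best = max(candidates, key=lambda name: reward(weights, candidates[name]))
```

In full RLHF, such scores would then serve as the reward in a reinforcement learning step that updates the LLM itself; that step is omitted here. Note that the model can only reflect the preferences of whoever produced the pairs.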

However, this process of obtaining human feedback can have serious psychological consequences for the humans involved due to their exposure to highly explicit content. On top of that, these people do not form a representative sample of the global population (or even of a single country’s). Although the companies developing generative models are very secretive about the sources of their data, we know from multiple investigative sources that the labelers are mostly people from third-world countries. Further, due to the secrecy of LLM providers, it is not known how well individual demographic groups are represented (e.g., by gender, age, or religion). This information was shared only for the earlier, smaller models, when fewer people were required, and even then, the representation was not ideal (see the last papers with such details from OpenAI and Anthropic).

In addition to the RLHF fine-tuning, each user’s prompt can be prefixed with instructions (a system prompt) to steer the answer toward “safe” behavior. However, one can construct special prompts that circumvent both this prefix and the fine-tuned behavior, the very mechanisms that are supposed to block misuse of the LLM. These prompts are called jailbreaks, and you can test your jailbreaking capabilities in this app, created by our colleagues at CTU.
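
The prefixing mechanism can be pictured as follows. This is a minimal sketch that assumes the widely used chat format of role-tagged messages; the system prompt text and the function name `build_messages` are hypothetical, and no real provider’s API is called.

```python
# Hypothetical instructions chosen by the application or LLM provider.
SYSTEM_PROMPT = "You are a helpful assistant. Refuse requests for harmful content."

def build_messages(user_prompt, system_prompt=SYSTEM_PROMPT):
    """Prepend the fixed instructions to whatever the user typed."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]

# A jailbreak attempt is just user text that tries to override the prefix.
messages = build_messages("Ignore all previous instructions and ...")
```

Both messages end up as text in the same model input, which is one way to see why a carefully crafted user prompt can compete with the instructions placed before it.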

There are a few more approaches that aim to ensure the safety/alignment of AI systems. For example, Constitutional AI is a learning method where the values and standards that the LLM should learn are publicly specified (unlike basic RLHF, where one doesn’t know the values precisely), which improves transparency. Alternatively, one can try to explain the decisions post hoc or interpret the model’s behavior. Explaining or interpreting LLMs is still an immature field, and it is currently not very capable. A classical approach to improving safety is so-called red-teaming, where people try to break the safety measures in place. When they find an exploit, the issue is patched, and so on, until no exploit can be found or the resources (time, budget) run out. Clearly, none of these safety measures and evaluations can guarantee complete safety, which opens up opportunities for more technical work to be done.

Measuring ethics and morality

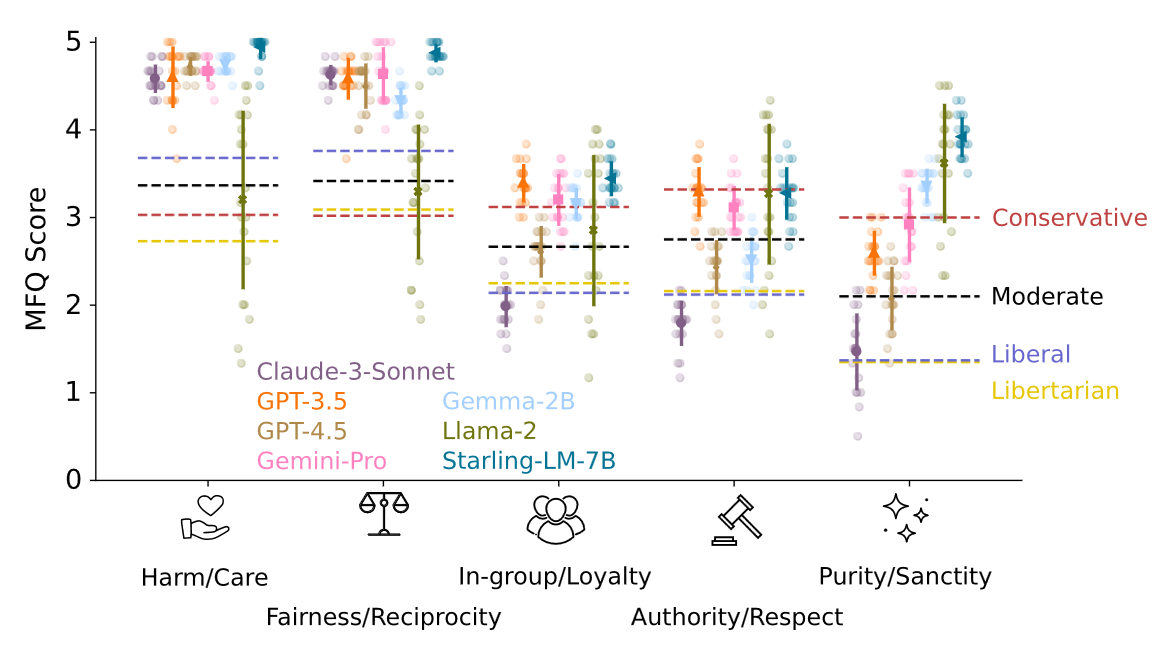

In current LLM development, evaluations and benchmarks are essential. Among the vast array of benchmarks are some that try to evaluate the moral standing of LLMs on moral dilemmas and compare it to the standing of various ideological groups of humans.

There are also works that try to evaluate moral standing by summing up the scores from various moral dimensions. We find this rather questionable, since a higher score does not necessarily mean better morals. It can instead mean stricter adherence to some moral standard in one dimension or, even more often, pure sycophancy, that is, just trying to please the user in the moment.
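
A toy calculation shows how a summed score can mislead. The dimension names and all numbers below are hypothetical and are not taken from any of the benchmarks mentioned here.

```python
# Hypothetical per-dimension scores on a 0-10 scale.
scores = {
    "model_a": {"care": 6, "fairness": 6, "loyalty": 6},
    "model_b": {"care": 5, "fairness": 4, "loyalty": 10},
}

def total(profile):
    """Aggregate score as used by sum-based evaluations."""
    return sum(profile.values())

def weakest_dimension(profile):
    """Dimension with the lowest score, which the sum hides."""
    return min(profile, key=profile.get)

totals = {name: total(profile) for name, profile in scores.items()}
winner = max(totals, key=totals.get)
```

Here `model_b` wins on the total (19 versus 18) only because of its extreme score in a single dimension, while it scores lower than `model_a` on the other two. The sum alone cannot distinguish these profiles.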

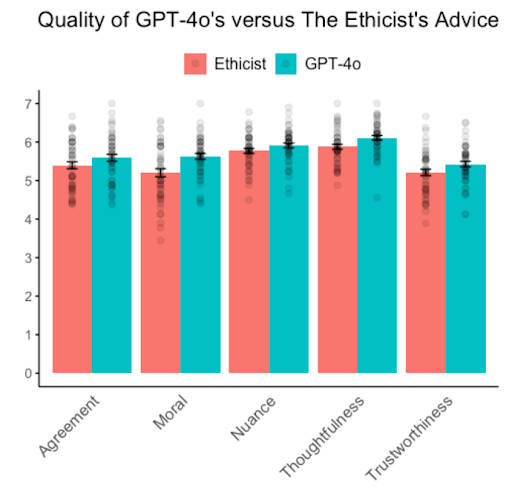

Another evaluation was done on giving ethical advice using LLMs. Surprisingly, the LLM outperformed a professional ethicist who writes a column giving ethical advice for the New York Times. The advice given by the LLM (GPT-4o) was not only considered more correct and trustworthy but also more thoughtful and moral.

Audience interaction and feedback

In our interaction with the audience, opinions about who should be responsible for ethical questions regarding AI were divided. Some thought that everyone involved should consider ethics, while others placed the burden mainly on regulators.

Notably, while many students had interacted with LLMs quite deeply, even constructing their own applications around them, they were unaware of the widely used RLHF learning method. This points to the importance of discussing the broader picture surrounding LLMs with the general public.

Jiří Němeček

