On Friday, November 3, 2023, we organized a seminar mapping the landscape of Responsible AI projects in Prague. We were pleasantly surprised by the turnout: more than 50 people concerned with the responsible development of AI technologies came to listen and discuss the many different societal impacts of AI, not least because the seminar was part of the AI Days, a week-long series of events happening across Czechia. The positive feedback we received will motivate us to continue our efforts. This post looks back at the first part of the talk; the recording of the whole conversation is available here.

What is Responsible AI?

Responsible AI (RAI) is a catchy, overused phrase that can arouse passions. To some, it states an active commitment to societal impact considerations; to others, it evokes new responsibilities being pushed upon them on top of their existing agenda. In the end, an AI system cannot be truly responsible; only its developers can bear the consequences. To avoid unnecessary negative emotions, let’s rephrase Responsible AI as Responsible development of AI, a considerate dedication to the impact of our AI creations. This commitment might come in two flavors: a negative one (restricting AI systems from causing harm) and a positive one (designing systems to benefit our society and environment). Both approaches are covered below.

RAI is a useful umbrella term to cover AI ethics, safety, governance, environmental impact, and more. We encounter RAI whenever we question the morality, safety, security, and non-material costs of our to-be-developed intelligent systems. Because of the nature of such questions, it would be highly appropriate to discuss them (and make progress in them) with experts from the respective field in an interdisciplinary manner. This is where the first trap awaits – how do we find a common ground and language? Too many interdisciplinary attempts have had people from different backgrounds aiming their ideas in parallel directions with no intersection, achieving only multidisciplinary, independent contributions with little potential for collaboration.

Secondly, when we talk about RAI as a buzzword, let’s not forget about many international companies (Google, Microsoft, PwC, and Accenture, among others) adopting the phrase as their overarching term for their internal ethical guidelines, as well as a signal to the public that their AI affairs mean no harm to society. Similarly, political organizations such as the European Union or UNESCO take advantage of the term in preparing regulations and policies.

RAI Taxonomy

You already see that there are many approaches to the topic being discussed. To capture as many approaches as possible, I have divided RAI into seven areas:

- Ethics and Fairness,

- Safety and Alignment,

- Governance,

- Environmental Impact,

- Security and Privacy,

- Trustworthiness,

- Philosophy and Value Systems (or Mind and Values).

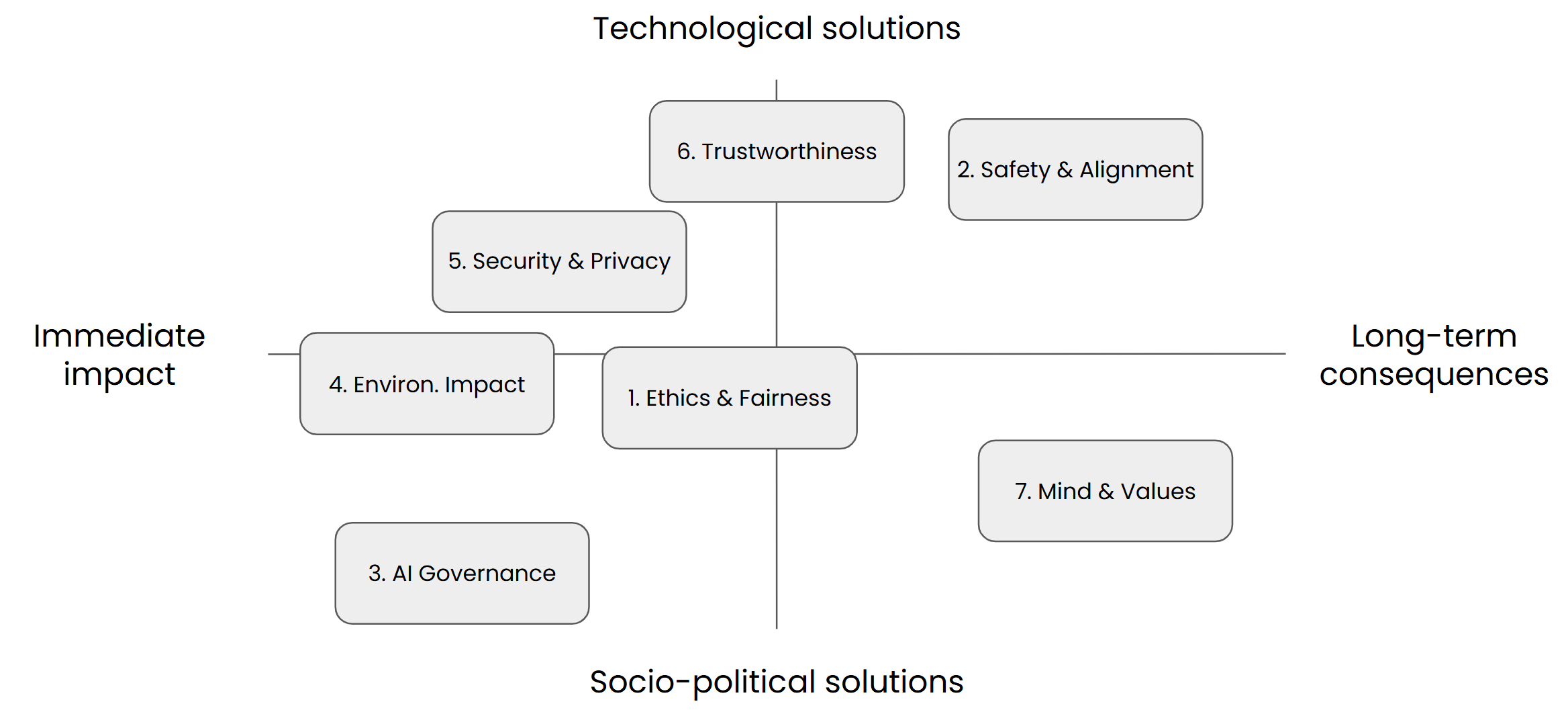

Further, I constructed a 2-axis diagram, with one axis describing the timeframe of the impact that a particular field (or the problems the field tries to solve) might have and the other axis referring to the type of solution the field currently offers. Below is my best attempt at positioning the seven areas onto the diagram. Note that the positioning does not represent my idea of an ideal state. Instead, it represents my view of the current state of the fields.

You see some areas, namely governance, environment, and ethics, more or less skewed towards the socio-political extreme. This stems from the fact that tech people still haven’t caught up in their interest or overall involvement in matters such as regulations and policymaking. On the other hand, security, trustworthiness, and safety are filled with technological suggestions for solutions, with AI explainability being one of the key objectives.

As for the impact axis, some areas have relatively long-term consequences in mind, such as safety caring about existential threats and philosophy caring about the implications of intelligent or even conscious machines. All the others would like to tackle present, rather urgent matters. Still, the reason for some of them being closer to the middle (ethics, trustworthiness) is our inability to resolve the issues effectively.

The fact that these areas have different focuses and preferences sometimes brings tensions. The most familiar dispute arising from these tensions is the long-lasting disagreement between the fields of ethics/fairness and safety/alignment. Proponents of safety have sometimes been accused by the ethics field of “distraction from the important issues”; conversely, proponents of AI safety might not regard ethics and fairness as such a pressing issue. As a result, the general public has witnessed multiple personal attacks. I find this animosity sad and unconstructive. There is no reason why AI ethics and AI safety could not coexist. Sometimes the same tools might be useful in both.

Are you interested in the rest of the talk? Have a look at the recording below:

This concludes the summary of my talk about Responsible AI in Prague. I hope you enjoyed reading the post and learned something from it. See you at one of our following events.

Martin Krutský


Kick-off presentation, mapping efforts towards Responsible AI in Prague.
