On November 28, 2024, people from FEE CTU interested in Responsible AI gathered for a talk by John Dorsch from the Center for Environmental and Technology Ethics. His presentation explored the relationship between algorithms and polarization: whether the algorithmic filtering employed by social media platforms contributes to ideological divides, and what can be done to address it. Here, we unpack the key points from the event.

The Simplified Perspective

Dorsch began by addressing a pervasive opinion: algorithms create “echo chambers” where individuals are only exposed to like-minded perspectives. This oversimplified narrative suggests that algorithmic filtering is a primary driver of ideological division, reinforcing existing beliefs while minimizing opportunities to encounter people with diverse views (also called cross-cutting exposure).

A More Nuanced View: Are Echo Chambers Really the Issue?

Drawing on recent studies, Dorsch argued that only a small percentage of users (typically below 5%) truly exist in algorithmic echo chambers. The figure varies by region and is higher in the US than in European countries. He highlighted that algorithms often reduce echo chambers by introducing users to content they might not actively seek. The randomness in recommendations also provides some exposure to opposing viewpoints, even if unintentionally.

The definition of echo chambers matters as well. They are typically described as consuming news from a single ideological perspective, a definition that fails to capture the broader social interactions and motivations behind news consumption.

Affective Polarization

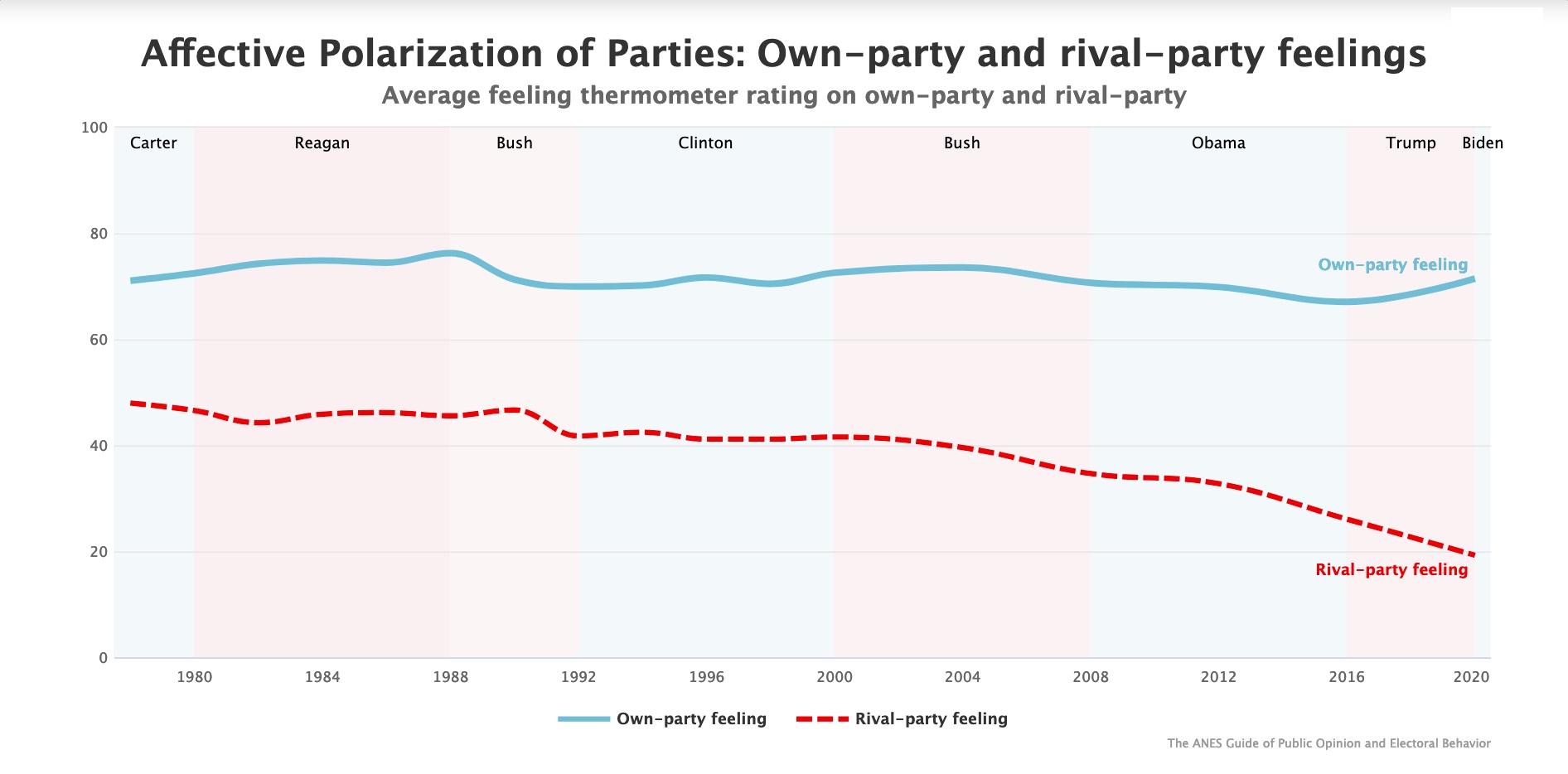

As an alternative to echo chambers for explaining the current dynamics on social media, Dorsch introduced the concept of affective polarization: the growing animosity between individuals with opposing views. Unlike ideological polarization, which has remained relatively steady, affective polarization has intensified. Dorsch identified several contributing factors:

- Partisan Media Consumption: Many individuals gravitate toward ideologically aligned media, reinforcing negative attitudes toward opposing groups.

- Cross-Cutting Exposure: Dorsch noted the paradox that interacting with opposing viewpoints can sometimes increase negative feelings. Exposure to extreme or contentious opinions often backfires, deepening affective divides.

- Drivers of Partisanship: Traditional drivers such as social sorting and economic divisions remain significant. However, Dorsch pointed out that algorithms introduce new dynamics, like heightened exposure to extremes, exacerbating affective polarization.

Algorithms and Epistemic Agency

The next part of the talk focused on epistemic agency, the control individuals have over forming their beliefs. Dorsch described two types of control:

- Intentional Control involves deliberate and goal-directed management of belief formation through sustained attention. It is susceptible to biases, especially in information selection—agents often choose sources that align with their beliefs and dismiss contradictory evidence.

- Evaluative Control is the automatic regulation of belief formation based on evidence and rational norms. It, too, is prone to distortion by cognitive biases.

Dorsch explained how algorithms often exploit evaluative control by leveraging cognitive biases rather than promoting rational thought. He posed the critical question: can algorithms be redesigned to encourage virtuous norms over divisive ones?

A Path Forward

In closing, Dorsch proposed that social network technologies could play a significant role in mitigating polarization. Platforms designed to foster balanced exposure and curb the spread of extreme content could bridge divides rather than deepen them. He stressed that achieving this balance would require intentional design choices that prioritize societal well-being over engagement metrics.

Dorsch’s presentation offered valuable insights into the interplay between algorithms and polarization. While he acknowledged that algorithms are not entirely to blame for ideological divides, he highlighted their influence on how people consume information and interact with one another. The audience left with a deeper understanding of the challenges and opportunities in creating a more constructive digital environment.

For more information, refer to the literature review by the Reuters Institute for the Study of Journalism at the University of Oxford, from which a sizeable portion of the presentation was sourced: https://reutersinstitute.politics.ox.ac.uk/echo-chambers-filter-bubbles-and-polarisation-literature-review

Martin Krutský

Ethics in AI Development: A Recap